LLMs explained simply and quickly

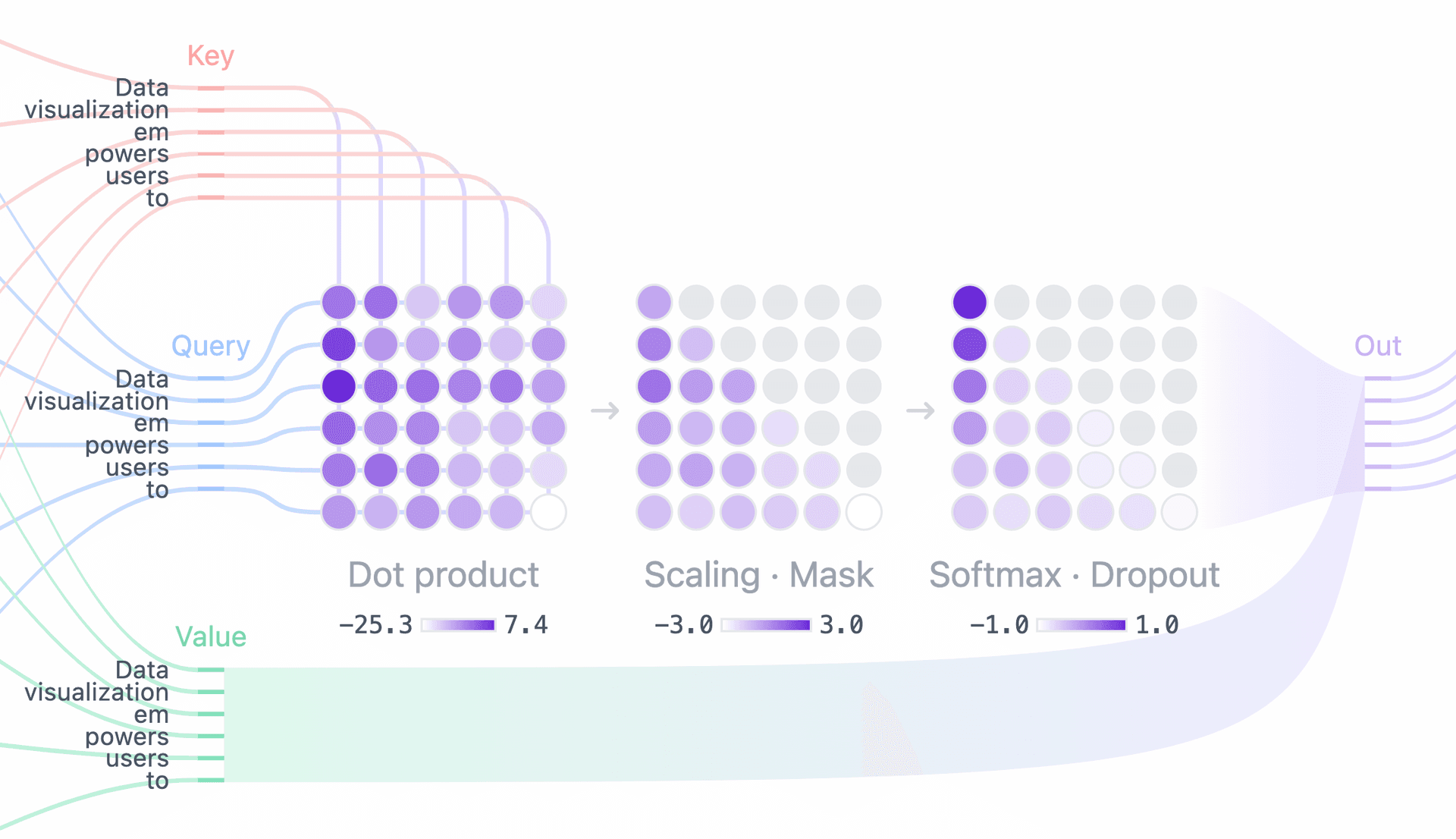

Large language models generate responses by predicting one token at a time, based on patterns they’ve learned from massive datasets. This explainer covers their core components: data, architecture, training, and how transformer models use context to simulate conversation.
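To make "predicting one token at a time" concrete, here is a minimal sketch of the generation loop. It is a toy, not how a real LLM works internally: the model is a simple table of word-pair counts that looks only at the previous token, whereas a transformer conditions on the whole context. The corpus and the names `CORPUS`, `train_bigram`, and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict

# Toy "training data" (an assumption for illustration); a real model
# learns from a vastly larger corpus.
CORPUS = "the cat sat on the mat . the cat ate the fish .".split()


def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_tokens=5):
    """Greedy decoding: repeatedly append the most frequent next token."""
    output = list(prompt)
    for _ in range(max_new_tokens):
        # Look up what followed the last token during "training".
        followers = counts.get(output[-1])
        if not followers:
            break  # Unseen token: nothing to predict from.
        next_token = followers.most_common(1)[0][0]
        output.append(next_token)
    return output


model = train_bigram(CORPUS)
print(" ".join(generate(model, ["the"])))
```

Each pass through the loop feeds the text produced so far back in and picks one more token. A real LLM follows the same outer loop but replaces the lookup table with a neural network, and usually samples from the predicted probabilities rather than always taking the top choice.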